
LoRA (Low-Rank Adaptation) explained in three minutes

Introduction

LoRA (Low-Rank Adaptation of Large Language Models) is a technique that updates only a small set of low-rank matrices instead of adjusting all the parameters of a deep neural network. This drastically reduces the number of trainable parameters, and with it the memory needed for gradients and optimizer states during training.

LoRA is particularly useful for large language models (LLMs), whose huge number of parameters would otherwise all have to be fine-tuned.

The Core Concept: Reducing Complexity with Low-Rank Decomposition


That sounds like a mouthful, but it is actually quite simple.

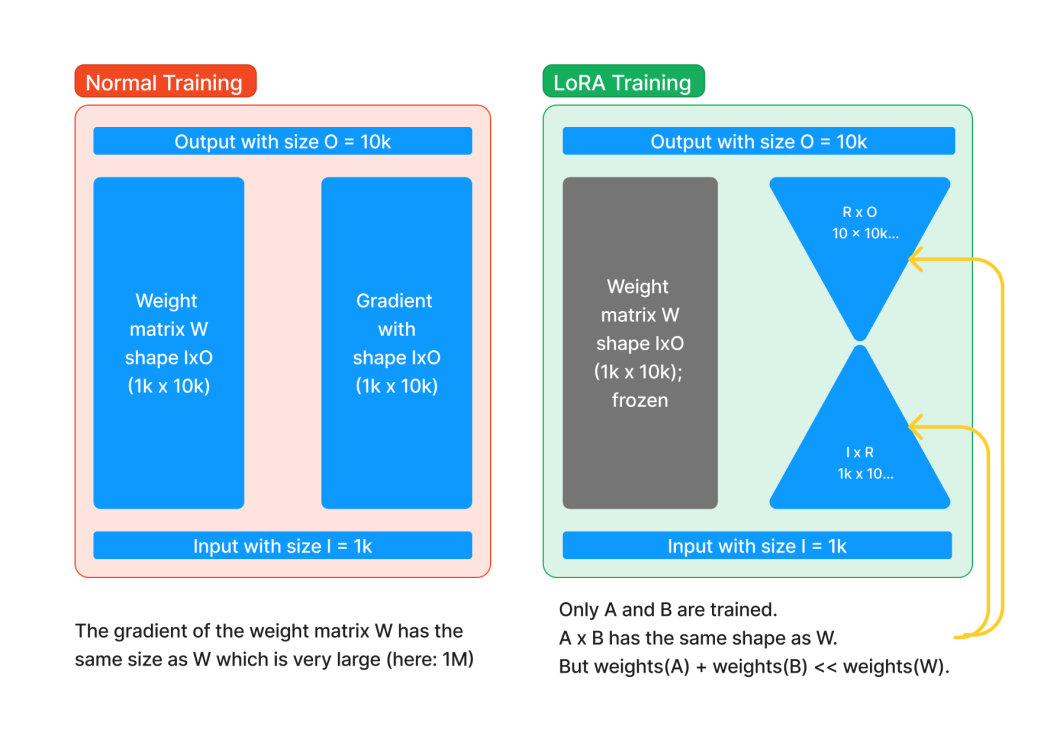

Let’s consider a weight matrix W of size IxO (you can think of a fully connected layer with I inputs and O outputs) where I=1000 and O=10000. This matrix has 10 million (10M) parameters.

Normally, you would fine-tune all of these 10M parameters during backpropagation.

The idea of LoRA is to leave W untouched and instead create two additional matrices, A of size IxR and B of size RxO. R is the rank of their product: the smaller R, the smaller the two matrices.

Illustration of LoRA in comparison to normal training, created by Marc Päpper.

Note that multiplying A and B gives an IxO matrix again, the same shape as W.
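A quick shape check makes this concrete. This is a minimal sketch assuming PyTorch and the sizes used in this section (I=1000, O=10000, R=10); A and B are just random stand-ins here. It also confirms that the product has rank R, no matter how large I and O are.

```python
import torch

I, O, R = 1000, 10000, 10  # input size, output size, rank

A = torch.randn(I, R)  # I x R
B = torch.randn(R, O)  # R x O

product = A @ B
print(product.shape)  # torch.Size([1000, 10000]), same shape as W

# An IxO matrix built from an IxR and an RxO factor has rank at most R
rank = torch.linalg.matrix_rank(product).item()
print(rank)  # 10
```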

Now let's choose R=10. Then A is of size 1000x10 and B of size 10x10000, so together they have 10,000 + 100,000 = 110,000 parameters, only about 1.1% of the 10M parameters in W.
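The same arithmetic in a few lines of plain Python, as a sketch (the helper name `lora_param_counts` is hypothetical, introduced only for illustration):

```python
def lora_param_counts(i: int, o: int, r: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for an i x o weight matrix."""
    full = i * o          # every entry of W is trainable
    lora = i * r + r * o  # entries of A (i x r) plus entries of B (r x o)
    return full, lora


full, lora = lora_param_counts(1000, 10000, 10)
print(full)               # 10000000
print(lora)               # 110000
print(f"{lora / full:.1%}")  # 1.1%
```

Because the LoRA count grows linearly in R while the full count is fixed, halving R roughly halves the number of trainable parameters.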

For fine-tuning, you then freeze the original weight matrix W and add a second path in which the input is multiplied by A and then by B; its result is added to the output of W. Only these 110,000 parameters are trained.
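Written out for a single input row vector x (matching the IxO layout of W used in this post), the adapted layer computes the following, with W frozen and only A and B trained:

```latex
h = xW + xAB = x\,(W + AB), \qquad
x \in \mathbb{R}^{1 \times I},\;
W \in \mathbb{R}^{I \times O},\;
A \in \mathbb{R}^{I \times R},\;
B \in \mathbb{R}^{R \times O}
```

The right-hand form is what later allows AB to be folded into W. The original LoRA paper additionally scales the AB term by a constant α/R, which this post leaves out.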

Putting theory into code

Let’s translate this theory into code with a simple implementation of LoRA using PyTorch:
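A minimal sketch of such a layer, assuming PyTorch and following the shapes and initialization described in this post (A is IxR with He initialization, B is RxO with zeros). The class name `LoRALinear` and the default `rank=10` are illustrative choices, not a library API.

```python
import torch
import torch.nn as nn


class LoRALinear(nn.Module):
    """Frozen nn.Linear plus a trainable low-rank update: y = base(x) + x @ A @ B."""

    def __init__(self, base: nn.Linear, rank: int = 10):
        super().__init__()
        self.base = base
        # Freeze the original weight and bias so the optimizer never changes them
        for p in self.base.parameters():
            p.requires_grad_(False)

        in_features, out_features = base.in_features, base.out_features
        # A (I x R): He (Kaiming) normal init, std = sqrt(2 / I)
        self.A = nn.Parameter(torch.randn(in_features, rank) * (2.0 / in_features) ** 0.5)
        # B (R x O): zeros, so x @ A @ B is zero before training starts
        self.B = nn.Parameter(torch.zeros(rank, out_features))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Frozen path plus low-rank path; only A and B receive gradients
        return self.base(x) + x @ self.A @ self.B


torch.manual_seed(0)
layer = LoRALinear(nn.Linear(1000, 10000), rank=10)

# Only A and B are trainable: 1000*10 + 10*10000 parameters
trainable = [p for p in layer.parameters() if p.requires_grad]
print(sum(p.numel() for p in trainable))  # 110000

# Hand only the trainable parameters to the optimizer
optimizer = torch.optim.AdamW(trainable, lr=1e-3)
```

Wrapping an existing `nn.Linear` keeps its pretrained weights intact. Note that A is stored as IxR to match this post, while `nn.Linear` itself stores its weight as OxI.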

This code showcases the simplicity of integrating LoRA, freezing the original linear layer while updating only matrices A and B during training.

There are further tweaks you can apply, such as merging the LoRA weights (AxB) into the original weight matrix once training is done, so inference costs no more than with the original layer. For more details, check out the code released with the original paper (here).
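Merging amounts to W ← W + AB. The sketch below, assuming PyTorch with small illustrative sizes and random stand-ins for trained A and B, checks that the merged layer gives the same output as the two-path version:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
I, O, R = 64, 128, 4  # small sizes for a quick check

base = nn.Linear(I, O)
A = torch.randn(I, R) * 0.1  # stand-ins for trained LoRA factors
B = torch.randn(R, O) * 0.1

x = torch.randn(8, I)
with torch.no_grad():
    unmerged = base(x) + x @ A @ B  # two paths, as during training

    # nn.Linear stores its weight as O x I, so the I x O update is transposed
    base.weight += (A @ B).T
    merged = base(x)  # a single matmul, as in the original layer

print(torch.allclose(unmerged, merged, atol=1e-5))  # True
```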

Note that A is initialized as usual with He initialization, but B is initialized with zeros. The product AxB is therefore zero, so at the start of training the adapted layer behaves exactly like the original one.
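A small check of this property, as a sketch assuming PyTorch; W here is a random stand-in for a pretrained weight:

```python
import torch

torch.manual_seed(0)
I, O, R = 1000, 10000, 10

W = torch.randn(I, O) * 0.01               # stand-in for a frozen pretrained weight
A = torch.randn(I, R) * (2.0 / I) ** 0.5   # He (Kaiming) normal init
B = torch.zeros(R, O)                      # zero init

x = torch.randn(4, I)
base_out = x @ W
delta = x @ A @ B  # low-rank path

print(torch.count_nonzero(delta).item())     # 0
print(torch.equal(base_out + delta, base_out))  # True
```

Training still gets going: in the first step A receives a zero gradient (it is multiplied by B = 0), but B receives a nonzero gradient, and once B is nonzero both matrices are updated.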

This should help you grasp the main idea of LoRA and how it is implemented. If you want to dive deeper, refer to the paper and code links above.