
Interactive visualization of Stable Diffusion image embeddings

A great site to discover images generated by Stable Diffusion (or by Lexica's own custom model, Aperture) is Lexica.art.

Lexica provides an API that can be used to query images matching a keyword or topic. The API returns image URLs, sizes and other metadata, such as the prompt used to generate each image and its seed.

The goal of this blog post is to visualize the similarity of images from different categories in an interactive plot which can be explored in the browser.

Lexica’s API

So let’s start off by getting images of a certain concept from Lexica:

The API is straightforward: you make a GET request for the concept you are interested in and receive a list of matching images.

With this implementation, we can, for example, call get_image_list_for('dogs', n=5) to get Lexica's metadata for 5 dog images.

A single one of these dog entries looks like this:

{'id': '001b5397-...', 'gallery': 'https://...', 'src': 'https://...', 'srcSmall': 'https://...', 'prompt': 'a dog playing in the garden', 'width': 512, 'height': 512, 'seed': '3420052746', 'grid': False, 'model': 'stable-diffusion', 'guidance': 7, 'promptid': '394934d...', 'nsfw': False}

The gallery entry is Lexica's URL for the gallery page of this image, where several related images are shown; src and srcSmall are the actual image URLs in two different qualities; and seed, model, prompt and guidance describe how the image was generated.
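To make this structure concrete, here is a small sketch with two hypothetical helpers that are not part of the post. generation_settings collects the fields that describe how an image was made (converting seed, which the API returns as a string, to an integer), and usable_entries is an optional filter, also an addition of this sketch, that drops entries flagged as grid images or NSFW.

```python
def generation_settings(entry: dict) -> dict:
    """Collect the fields of a Lexica entry that describe how the image was generated."""
    return {
        "prompt": entry["prompt"],
        "model": entry["model"],
        "seed": int(entry["seed"]),  # the API returns the seed as a string
        "guidance": entry["guidance"],
        "size": (entry["width"], entry["height"]),
    }


def usable_entries(entries: list[dict]) -> list[dict]:
    """Optional filter (not in the original post): skip grid and NSFW-flagged entries."""
    return [e for e in entries if not e.get("grid") and not e.get("nsfw")]
```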

Download of images

Next, let's write some code to download the actual images from the src entries and save each one under the concept name plus an index number:

Now when we call download_concept('dogs', n=5), the function downloads the first 5 dog images that Lexica returns to us and stores them as dogs0.jpg, dogs1.jpg, etc. In addition, it stores the full API result in dogs.json.

Embedding the images with CLIP

To check how similar images are, we can embed them using the CLIP model, which (in the variant used here) represents each image as a vector of 512 numbers. Using those embeddings, we can then cluster the images and see which ones are actually similar to one another.

So let's set up CLIP after installing some dependencies (pip install -U torch datasets transformers):
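A sketch of this setup with Hugging Face transformers, assuming the openai/clip-vit-base-patch32 checkpoint (a CLIP variant whose image embeddings have 512 dimensions); the checkpoint choice and load_clip are assumptions. Unlike the post's embed_images, which takes only the images, this version receives the model and processor explicitly. Pillow is needed for the PIL images.

```python
import torch
from PIL import Image
from transformers import CLIPModel, CLIPProcessor

# Assumption: the ViT-B/32 checkpoint; its image embeddings have 512 dimensions.
MODEL_NAME = "openai/clip-vit-base-patch32"


def load_clip(model_name: str = MODEL_NAME):
    """Load the CLIP model (downloaded on first use) and its preprocessing."""
    model = CLIPModel.from_pretrained(model_name).eval()
    processor = CLIPProcessor.from_pretrained(model_name)
    return model, processor


@torch.no_grad()
def embed_images(images: list[Image.Image], model, processor) -> torch.Tensor:
    """Embed PIL images; returns a tensor of shape (len(images), embedding_dim)."""
    # CLIP expects 3-channel input, so grayscale/RGBA images are converted first.
    images = [image.convert("RGB") for image in images]
    inputs = processor(images=images, return_tensors="pt")
    return model.get_image_features(pixel_values=inputs["pixel_values"])
```

Usage: model, processor = load_clip(), then embed_images([Image.open('dogs0.jpg')], model, processor) returns a tensor of shape (1, 512) for this checkpoint.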

Now we have a method embed_images that takes a list of PIL images and returns one embedding per image.

We can combine downloading images and embedding them:
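A sketch of the embedding half of this step, assuming the images were already saved as <concept>0.jpg, <concept>1.jpg, and so on in out_dir. embed_saved_concept is a hypothetical name; it accepts any function that maps a list of PIL images to a tensor, such as the post's embed_images, so it can be chained after the download step.

```python
import os

import torch
from PIL import Image


def embed_saved_concept(concept: str, n: int, embed_fn, out_dir: str = ".") -> torch.Tensor:
    """Embed <concept>0.jpg ... <concept>{n-1}.jpg and save the tensor as <concept>.pt."""
    images = []
    for i in range(n):
        with Image.open(os.path.join(out_dir, f"{concept}{i}.jpg")) as image:
            images.append(image.convert("RGB"))  # convert() loads the pixels into a copy
    embeddings = embed_fn(images)
    torch.save(embeddings, os.path.join(out_dir, f"{concept}.pt"))
    return embeddings
```

A download_and_embed('dogs', n=5) could then download the five files first and finish with embed_saved_concept('dogs', 5, embed_images).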

When we call download_and_embed('dogs', n=5) we will use the Lexica API to download 5 images of dogs, save them to disk, run them through the CLIP model and save their embeddings as dogs.pt.

Data Download

We are almost there; we just need to download a few more categories to make things more interesting:

After this, we have 10 images for each of our 12 categories with their embeddings stored locally. Great!

Now comes the fun part: visualization.

Visualization t-SNE

To visualize, we will take all of our embeddings and put them together into a single embedding data structure, so that we can then compute their similarities:

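All embeddings are stored in the variable all_emb, which has shape (120, 512): 120 images with 512 embedding dimensions each.

Note that we normalized each embedding by its L2 norm so that every vector has unit length. The dot product of two embeddings is then their cosine similarity, which makes the values easy to compare.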
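The stacking and normalization can be sketched as follows, assuming each concept's embeddings were loaded (for example with torch.load(f'{concept}.pt')) into a dict keyed by concept name; stack_embeddings is a hypothetical helper. F.normalize divides every row by its L2 norm.

```python
import torch
import torch.nn.functional as F


def stack_embeddings(emb_by_concept: dict[str, torch.Tensor]):
    """Concatenate per-concept embeddings into one L2-normalized tensor plus labels."""
    all_emb = torch.cat(list(emb_by_concept.values()), dim=0)
    # One label per row, in the same order as the concatenation.
    labels = [concept for concept, emb in emb_by_concept.items() for _ in range(len(emb))]
    # Unit-length rows: the dot product of two rows is their cosine similarity.
    all_emb = F.normalize(all_emb, p=2, dim=1)
    return all_emb, labels
```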

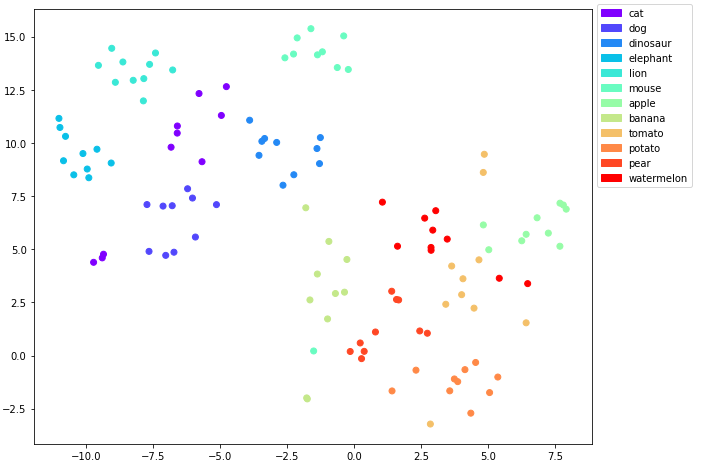

Let’s check out the t-SNE plot of this data structure:
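A sketch of how such a plot can be produced with scikit-learn's TSNE and matplotlib. The helper names, the perplexity of 30 and the fixed random seed are assumptions, not the post's settings; perplexity must stay below the number of points (120 here). The tab20 palette is used because matplotlib's default color cycle has only 10 colors, fewer than our 12 categories. all_emb is expected as a CPU tensor without gradients or a NumPy array.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.manifold import TSNE


def tsne_2d(all_emb, perplexity: float = 30.0, seed: int = 0) -> np.ndarray:
    """Project an (n_images, dim) embedding matrix to 2-D with t-SNE."""
    X = np.asarray(all_emb, dtype=np.float32)
    return TSNE(n_components=2, perplexity=perplexity, random_state=seed).fit_transform(X)


def scatter_by_label(points: np.ndarray, labels, title: str):
    """Draw one colored scatter series (and legend entry) per category."""
    labels = np.asarray(labels)
    cmap = plt.get_cmap("tab20")  # 20 distinct colors, enough for 12 categories
    fig, ax = plt.subplots(figsize=(8, 6))
    for i, label in enumerate(sorted(set(labels.tolist()))):
        mask = labels == label
        ax.scatter(points[mask, 0], points[mask, 1], s=15, color=cmap(i % 20), label=label)
    ax.set_title(title)
    ax.legend(fontsize="small")
    return fig
```

For example, scatter_by_label(tsne_2d(all_emb), labels, "t-SNE").savefig("tsne.png") writes the figure to disk.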

t-SNE plot of the embeddings with the classes as labels

OK, good: there is some overlap here and there, for example between cats and dogs and among some of the edibles, but there are some clear clusters as well.

Visualization UMAP

To compare with t-SNE, let's also look at a UMAP plot, after installing UMAP with pip install umap-learn:

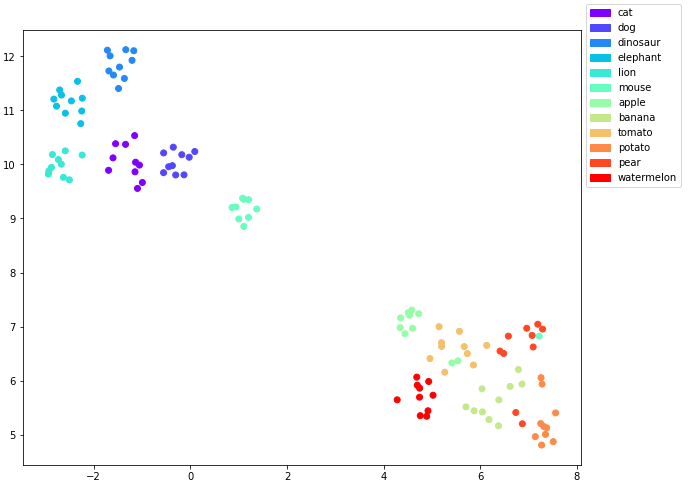

UMAP plot of the embeddings with the classes as labels

So that looks even a bit cleaner than the t-SNE plot. All the animal clusters are clearly separated in the upper left of the graph while the edibles are in the lower right.

Among the edibles, some points still overlap.

It would be interesting to find out which images overlap here to better understand why. What we are missing at the moment is a way to tell which image belongs to which data point, and it would be really nice to see the images corresponding to our data points directly in the visualization.

So let’s make it more interactive!

Interactive visualization with Bokeh

To do so, we will first base64-encode the images so that we can embed them in HTML:
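A possible sketch using Pillow and the standard library. to_data_uri is a hypothetical helper; shrinking each image to a thumbnail before encoding is an assumption made here to keep the HTML file small, since every image is stored inline.

```python
import base64
import io

from PIL import Image


def to_data_uri(image: Image.Image, max_size: int = 128) -> str:
    """Return a base64 JPEG data URI of a thumbnail, usable as an <img> src."""
    thumb = image.convert("RGB")  # JPEG has no alpha channel; convert() returns a copy
    thumb.thumbnail((max_size, max_size))  # resizes in place, keeping the aspect ratio
    buffer = io.BytesIO()
    thumb.save(buffer, format="JPEG", quality=85)
    return "data:image/jpeg;base64," + base64.b64encode(buffer.getvalue()).decode("ascii")
```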

Then we use Bokeh to make a cool interactive plot:
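A sketch of such a plot with Bokeh's custom HTML tooltips, assuming 2-D points from t-SNE or UMAP as a NumPy array, one label per point, and one base64 data URI per image from the encoding step. interactive_plot, the output path and the styling are hypothetical; the @image and @label placeholders in the tooltip are filled from the matching ColumnDataSource columns.

```python
from bokeh.models import ColumnDataSource
from bokeh.palettes import Category20
from bokeh.plotting import figure, output_file, save
from bokeh.transform import factor_cmap

# Hover template: "@image" and "@label" are replaced by each point's column values.
TOOLTIPS = """
<div>
  <img src="@image" width="128" style="display:block">
  <span>@label</span>
</div>
"""


def interactive_plot(points, labels, image_uris, path: str = "embeddings.html"):
    """Write a standalone HTML scatter plot that shows each image on hover."""
    source = ColumnDataSource(data={
        "x": points[:, 0],
        "y": points[:, 1],
        "label": list(labels),
        "image": list(image_uris),
    })
    factors = sorted(set(labels))
    p = figure(width=800, height=600, tooltips=TOOLTIPS,
               title="CLIP embeddings of Lexica images")
    # One color per category; the 20-color palette covers up to 20 categories.
    p.scatter("x", "y", source=source, size=8, legend_field="label",
              color=factor_cmap("label", palette=Category20[20], factors=factors))
    output_file(path, title="Stable Diffusion embeddings")
    save(p)
    return p
```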

Try it out yourself!

When you hover over a data point, you can see the Stable Diffusion image it corresponds to, as retrieved from Lexica.

Embeddings analysis

Now that we can see what the images look like, we can take a closer look at the cluster outliers:

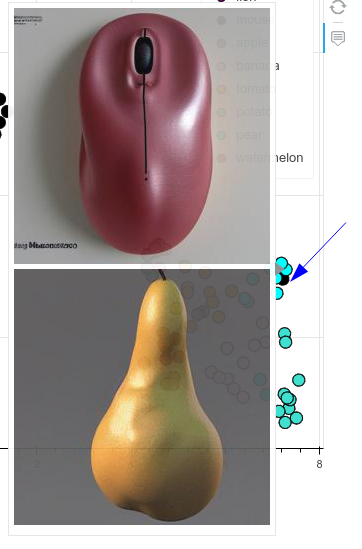

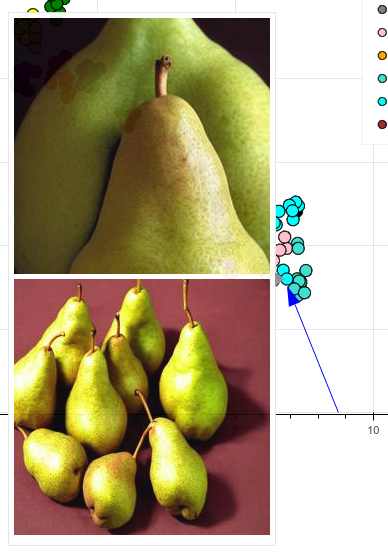

- A single mouse appears clustered together with the pears. It turns out to be a computer mouse, whose shape resembles a pear a little:

Mouse found inside the pear cluster

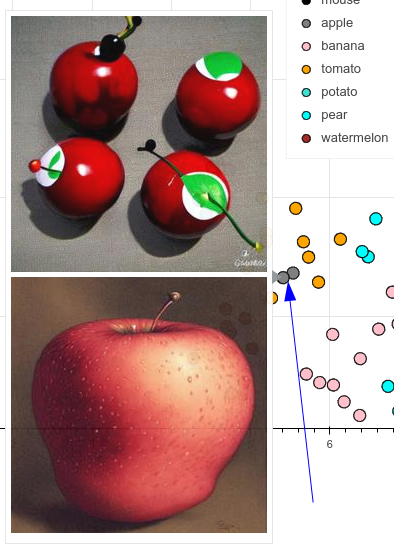

- Some apples get confused with tomatoes; if they are red and round, that kind of makes sense:

Some apples look like tomatoes

- Two pears dance out of line and get clustered closer to potatoes and bananas than to their pee(a)rs. I'm not really sure why (if you have an idea, please comment):

Pears dance out of line
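The outliers above were found by hovering over the plot. As a complementary check, and not the method used in this post, a nearest-centroid heuristic can flag images whose CLIP embedding is more similar (by cosine similarity) to another category's mean embedding than to their own. centroid_outliers is a hypothetical helper; since it works in the full embedding space rather than on the 2-D projection, it will not necessarily flag exactly the same points.

```python
import numpy as np


def centroid_outliers(all_emb, labels):
    """Return (index, label, nearest_label) for points closer to another class centroid."""
    emb = np.asarray(all_emb, dtype=np.float64)
    emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    labels = np.asarray(labels)
    classes = np.array(sorted(set(labels.tolist())))
    # Mean direction of each category, re-normalized to unit length.
    centroids = np.stack([emb[labels == c].mean(axis=0) for c in classes])
    centroids /= np.linalg.norm(centroids, axis=1, keepdims=True)
    # Cosine similarity of every image to every centroid: shape (n_images, n_classes).
    nearest = classes[(emb @ centroids.T).argmax(axis=1)]
    return [(int(i), str(labels[i]), str(nearest[i]))
            for i in np.flatnonzero(nearest != labels)]
```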

Overall, I’m quite impressed that the semantic space is so well represented and concepts cluster quite tightly together.